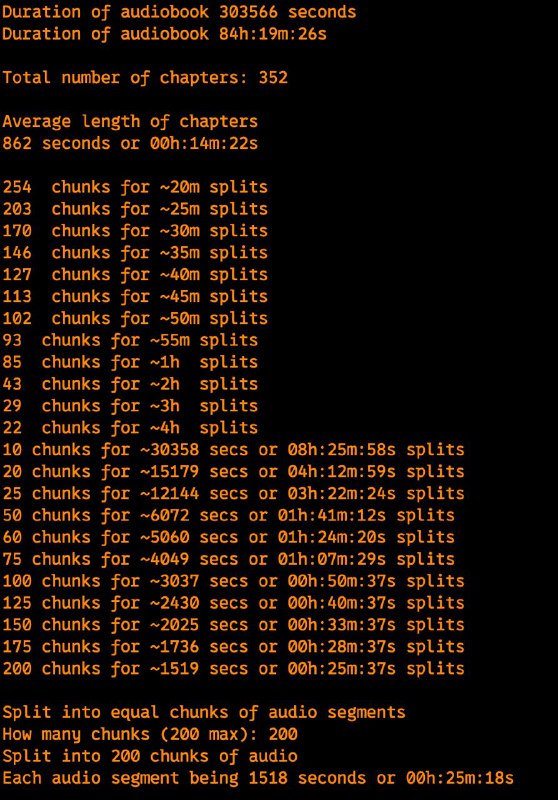

This particular audiobook I'm transcribing is 84 hours long, so 200 chunks works out to about 25 minutes each. I found that running the large model with CoreML set to use all compute units works fine, but then I can't really use the M1 for anything else. Adding the `-ng` (no GPU) option lets me keep using the laptop while whisper.cpp runs, and it only goes a tad slower. Worth it for me, though.
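As a rough sketch of the chunk arithmetic and the per-chunk command, the snippet below splits a total duration evenly and builds a whisper.cpp argument list with `-ng` appended. The binary path (`./main`, called `whisper-cli` in newer builds), the model filename, and the chunk file names are placeholder assumptions, not the exact setup used here; the helper names are hypothetical.

```python
import shlex


def chunk_minutes(total_hours: float, n_chunks: int) -> float:
    """Length of each chunk in minutes when the audio is split evenly."""
    return total_hours * 60 / n_chunks


def chunk_bounds(total_hours: float, n_chunks: int) -> list[tuple[float, float]]:
    """(start, end) pairs in seconds for each evenly sized chunk."""
    total_s = total_hours * 3600
    step = total_s / n_chunks
    return [(i * step, min((i + 1) * step, total_s)) for i in range(n_chunks)]


def whisper_cmd(
    chunk_path: str,
    model_path: str = "models/ggml-large.bin",  # hypothetical model path
    binary: str = "./main",                     # hypothetical binary path
    no_gpu: bool = True,
) -> list[str]:
    """Argument list for one chunk; -ng keeps whisper.cpp off the GPU."""
    cmd = [binary, "-m", model_path, "-f", chunk_path]
    if no_gpu:
        cmd.append("-ng")
    return cmd


if __name__ == "__main__":
    print(f"{chunk_minutes(84, 200):.1f} minutes per chunk")
    start, end = chunk_bounds(84, 200)[0]
    print(f"first chunk: {start:.0f}s to {end:.0f}s")
    # Hypothetical chunk naming; print the command rather than run it.
    print(shlex.join(whisper_cmd("chunk_000.wav")))
```

Printing the command instead of launching it makes it easy to check the flags before feeding the list to `subprocess.run` in a loop over all 200 chunks.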
